The Open University's "Introduction to Cyber Security" is a free online course -- with optional certificate -- that teaches the fundamentals of crypto, information security, and privacy; I host the series, which starts on Oct 13."

The course is designed to teach you to use privacy technologies and good practices to make it harder for police and governments to put you under surveillance, harder for identity thieves and voyeurs to spy on you, and easier for you and your correspondents to communicate in private.

I'm a visiting professor at the OU, and I was delighted to work on this with them.

We shop online. We work online. We play online. We live online. As our lives increasingly depend on digital services, the need to protect our information from being maliciously disrupted or misused is really important.

This free online course will help you to understand online security and start to protect your digital life, whether at home or work. You will learn how to recognise the threats that could harm you online and the steps you can take to reduce the chances that they will happen to you.

With cyber security often in the news today, the course will also frame your online safety in the context of the wider world, introducing you to different types of malware, including viruses and trojans, as well as concepts such as network security, cryptography, identity theft and risk management.

Last hour to buy! Thanks one last time everyone. We're so happy to be able to make this book the way we want to make it.

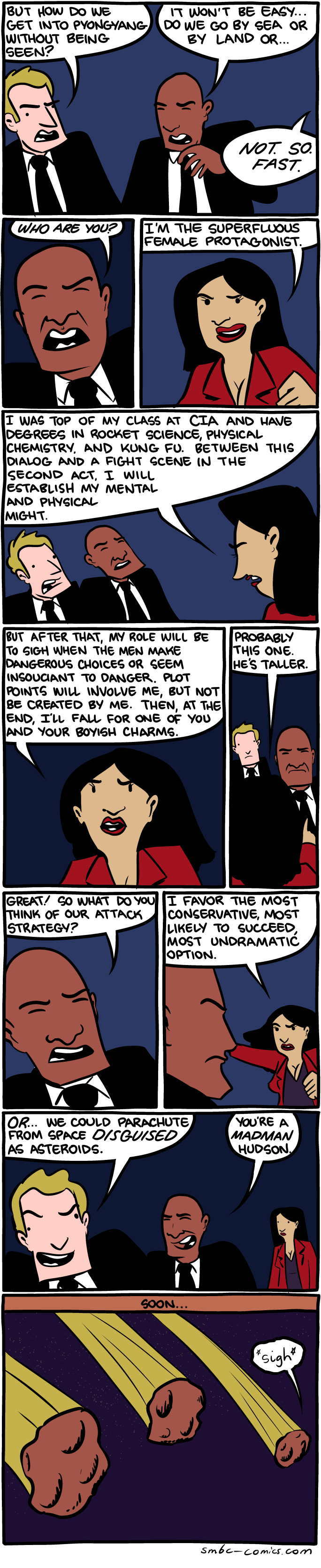

To give this years April Fools’ day a more analytical touch, we decided last week do a little poll on internet cartoons. We asked our friends and colleagues to select their favourite data related cartoon on the web, and organized a voting session to construct a top 5 list. (You can always share your own favourites in the comments.)

We proudly present you the winners of the April Fools’ 2014 Data Cartoon awards:

Number One: The Cloud

Number Two: A Study on Statistics

Number Three: Pacman Statistics

Number Four: Dilbert One

Number Five: Haloween Statistics

Number Six: Dilbert Two

Number Seven: XKCD Correlation

Disqualified for the competition, but still funny:

R-bloggers.com offers daily e-mail updates about R news and tutorials on topics such as: visualization (ggplot2, Boxplots, maps, animation), programming (RStudio, Sweave, LaTeX, SQL, Eclipse, git, hadoop, Web Scraping) statistics (regression, PCA, time series, trading) and more...

Mr. Jabez Wilson laughed heavily. “Well, I never!” said he. “I thought at first that you had done something clever, but I see that there was nothing in it after all.”

“I begin to think, Watson,” said Holmes, “that I make a mistake in explaining. ‘Omne ignotom pro magnifico,’ you know, and my poor little reputation, such as it is, will suffer shipwreck if I am so candid….”

The Red-Headed League, Arthur Conan Doyle

I recently was asked by a manager, an English major, who struggled with the quantitative part of the GMAT (Graduate Management Admissions Test) how to learn math. Many people struggle with mathematics in our increasingly mathematical world, ranging from balancing their checkbook to managing a budget at work to understanding the abstruse mathematical models that are invoked more and more often in public policy debates such as global warming. This article is an expanded version of my answer.

The most important rule to master mathematics is: if you get lost (and most people including “experts” get lost a lot), back up to what you know and start over. Don’t try to keep going; in most cases, you will only get more lost. If necessary, when you back up and start over, take smaller steps, find and use simpler, more concrete, and more specific learning materials and examples, and practice more at each step. Repeat this process, backing up, simplifying and practicing more until you find yourself making progress. In a nutshell, this is the secret of mastering mathematics for most people.

Mathematics is not Like English

Many mathematically oriented people have weak verbal skills. The verbal SAT scores for students at engineering and science schools such as MIT, Caltech, and Carnegie-Mellon are generally much weaker than their impressive quantitative/math scores. Conversely, many people with strong verbal skills are weak at mathematics. I am somewhat unusual in that I scored in the top 99th percentile on the verbal sections of both the undergraduate SAT and graduate GRE exams. I can compare and contrast learning Mathematics and learning English (and other humanities) better than most.

Mathematics differs from English and many other humanities. In mathematics, each step depends critically on each preceding step. Learning addition depends on knowing the numbers, being able to count. Multiplication is meaningless without a mastery of addition: three times four means “add three fours together (4 + 4 + 4)” or “add four threes together (3 + 3 + 3 + 3)”. Division is defined in terms of multiplication: twelve divided by three is the number which when multiplied by three gives twelve (the answer is four). This critical dependence of each step on a preceding step or steps is found in most mathematics from basic arithmetic to algebra and calculus to proving theorems in advanced pure mathematics to performing complex calculations by hand or using a computer.

In English and many other humanities, missing a step — not knowing the definition of a new word, skipping a few sentences or even pages in a rush, etc. — is frequently not a show-stopper. You can keep going. The meaning of the unknown word or the skipped passages will often become clear from context. It is important to get the big picture — the gist of a passage, article, or book — but specific details frequently can be missed or poorly understood without fatal consequences. You can still get an A in school or perform well at work. Of course, it is better to read and understand every word and detail, but it is usually not essential.

In mathematics, when you encounter a unknown term or symbol, it is critical to master its meaning and practical use before continuing. Otherwise, in the vast majority of cases, you will become lost and get more and more lost as you proceed. If a single step in a calculation, derivation of a formula, or proof of a theorem doesn’t make sense, you need to stop, back up if necessary, and master it before proceeding. Otherwise, you will usually get lost. This is a fundamental, qualitative difference between Mathematics and English (and many other humanities).

Don’t Compare Yourself to Prodigies

The popular image of mathematics and mathematicians is that mathematics is akin to magic and mathematicians are anti-social weirdos born with a magical power that enables them to solve differential equations in the cradle — no practice or hard work required. The movie Good Will Hunting (1997) features Matt Damon as a self-taught mathematical genius from a tough, poor Irish neighborhood in Boston and janitor at MIT who solves world-class math problems left on classroom blackboards while cleaning. The hit situation comedy The Big Bang Theory features Jim Parsons as Sheldon Cooper, a crazed theoretical physicist with the supposed symptoms of Asperger’s Syndrome who apparently published breakthrough research as a teenager. The 1985 movie Real Genius, set at a fictional university very loosely based on CalTech, features Gabriel Jarret as Mitch Taylor, a fifteen year old self-taught child prodigy with a terrible relationship with his unsupportive parents who is shown performing breakthrough research for the CIA as a (15 year old) freshman at “Pacific Tech.” Many more examples may be cited in movies, television, and popular culture.

Two points. First, these popular, mostly fictional portrayals of math and science prodigies are greatly exaggerated compared to actual prodigies, as impressive and intimidating as the real prodigies can sometimes be. Fictional prodigies, like Matt Damon’s Will Hunting, are frequently depicted as arising as if by magic or divine intervention in highly unlikely families and circumstances. In contrast, the most common background for math or science prodigies seems to be an academic family — Dad or Mom or both parents are professors — or a similar math and science rich family environment. Many prodigies that I met at CalTech or other venues have academic or other knowledge-rich family backgrounds. Not a single janitor from MIT ![]() .

.

Fictional prodigies are also frequently portrayed making major scientific or technological breakthroughs as teenagers. This is exceptionally rare in the real world. It is true there have been a fair number of major scientific and technological breakthroughs by people in their twenties, but teenagers are quite rare. Even Philo Farnsworth, who is often credited with devising electronic television at fourteen, did not have a working prototype of the electronic television set until he was twenty.

Most real math prodigies, like most or all chess prodigies, appear to achieve their remarkable performance through extensive study and practice, even if they have some inborn knack for mathematics. Curiously, many real prodigies do not achieve the accomplishments that one might expect later in life.

Second, genuine prodigies are very rare. Despite the portrayal in Real Genius most undergraduates at CalTech in the 1980′s were not real-world prodigies, let alone exaggerated fictional prodigies like Mitch Taylor and Chris Knight (played by Val Kilmer). Historically, especially prior to the transformation of math and science during and immediately after World War II which made it more difficult to pursue a career in math or science without very high quantitative scores on standardized tests and exams, many breakthroughs in mathematics and highly mathematical sciences were made by non-prodigies. The mathematician Hermann Grassmann was described as “slow” by some of his teachers. Minkowski famously referred to Einstein as “that lazy dog.” Grassmann and Einstein are both examples of “late bloomers” in math and physics.

In learning math, don’t compare yourself to prodigies, especially fictional prodigies. Most people who are proficient in math weren’t prodigies.

How to Learn Math

Again, to learn math, if you get lost, which is common and natural, back up to what you know, make sure you really know it, practice what you know some more and then work forward again. You may need to repeat this many times.

Sometimes a step may be difficult. Try to break the difficult step down into simpler steps if possible. Learn each simpler step in sequence, one at a time. Mathematics textbooks and other learning materials sometimes skip over key steps, presenting two or more steps as a single step, assuming this is obvious to the student (it often is not) or will be explained further in the classroom (it often is not). Consequently, be on alert that a single confusing step may hide several steps. If a single step is confusing, try to find a teacher, another student, or learning materials that can explain the step more clearly and in more specific detail.

Mathematics is an abstract subject and suffers from excessive abstraction in learning materials and teaching. A notorious example of this is the “New Math” teaching experiment of the 1960′s.

Some of you who have small children may have perhaps been put in the embarrassing position of being unable to do your child’s arithmetic homework because of the current revolution in mathematics teaching known as the New Math. So as a public service here tonight I thought I would offer a brief lesson in the New Math. Tonight we’re going to cover subtraction. This is the first room I’ve worked for a while that didn’t have a blackboard so we will have to make due with more primitive visual aids, as they say in the “ed biz.” Consider the following subtraction problem, which I will put up here: 342 – 173.

Now remember how we used to do that. three from two is nine; carry the one, and if you’re under 35 or went to a private school you say seven from three is six, but if you’re over 35 and went to a public school you say eight from four is six; carry the one so we have 169, but in the new approach, as you know, the important thing is to understand what you’re doing rather than to get the right answer. Here’s how they do it now….

Tom Lehrer, Introduction to New Math (The Song)

The rule (for most people) in math is: if a step proves too abstract, look for more concrete, specific learning materials and examples. If “balls in urns” (a notorious cliche in probability and statistics) is too abstract for you, look for explanations and examples with “cookies in jars” or something else more concrete and relevant to you. Something you can easily visualize or even get from the kitchen and use to act out the problem.

The simpler and the more concrete and specific you can make each step in learning math, the easier it will be for most people. Practice, practice, practice until you have mastered the step. It usually takes a minimum of three worked examples or other repetitions to remember something. Often many more repetitions, followed by continued occasional use, is needed for full mastery. Then, and only then, move on to the next step in the sequence.

Start with the simple, the concrete, and the specific. In time the abstract and the more complex will come. Don’t start with the abstract or the complex. If something is too abstract or complex for you, make it concrete and simplify if possible. Search the library, the used book store, the Web, anywhere you can, for simpler, more concrete learning materials and examples that work for you. Practice, practice, practice. Today, many tutorials, videos of lectures, and other materials (of widely varying quality) are available for free on the Web.

The Perils of Drinking from a Firehose

The critical dependency of each step on mastering the preceding step in learning math has some strong consequences for education. When I applied to CalTech many moons ago, the promotional materials from the university included a phrase comparing learning at CalTech to “drinking from a firehose.” This is the sort of rhetoric that appeals to young people, especially young men. Of course, no one in their right mind would try to drink from a firehose. This did not occur to me at the time.

In the 1980′s, perhaps to the present day, CalTech had a stunning drop out rate of about a third of its top rated, highly intelligent students.

It soon became apparent that much of the teaching by big-name researchers was rather mediocre. It did not compare well to the math and science teaching I had experienced previously. At the time, I lacked an adequate understanding of how math and science topics are successfully taught and learned to explain what the professors were doing wrong. It should be noted that success as a researcher or scholar appears to be unrelated to the ability and skill to actually teach in one’s field ![]() .

.

What was the problem? In general, the professors were rushing through the material, especially many foundational topics and concepts that they considered basic and obvious — sometimes even skipping them entirely. They often gave extremely advanced, complex, sometimes “trick” problems as introductory examples, homework, and exam problems. The problems may have been intellectually fascinating to a researcher with years of experience but quite inappropriate for an undergraduate class in mathematics or physics.

I have a still vivid memory of a second year mathematics course professor droning on about “linear functions” and “linear operators” until one frustrated student finally spoke up and asked: “What is linear?” Now, the professor did give a pretty good answer as to what linear meant in mathematics, but the point is that the idea was taken so much for granted by the big-name mathematics faculty that they had not even bothered to teach it in their introductory classes. ![]()

Looking back, most of the undergraduates at CalTech were coming from schools with excellent math and science teaching which followed many of the rules outlined in this article. There was enough use of simple examples and repetition built into the classes to ensure that a motivated student would absorb and master the material. In fact, in many cases, the very bright students who went on to CalTech probably felt they could go faster, hence the appeal of “drinking from a firehose.”

The lesson for anyone learning math is to make sure whatever course or curriculum that you take goes slow enough, taking the time to present each step in a simple, clear way, that you can fully absorb the material — learn and master each step before advancing to the next step. It should not be “drinking from a firehose.” Rather, you should feel you could go a bit faster. Not ten times faster, but there should be a cushion, more time and repetition than absolutely necessary, in case you have difficulties learning a particular step, get sick, break up with your girlfriend/boyfriend, or something else happens. Real life is full of unexpected setbacks.

Conclusion

Each step in learning mathematics depends critically on learning and mastering a preceding step or steps. The most important rule to master mathematics is: if you get lost (and most people including “experts” get lost a lot), back up to what you know and start over. Don’t try to keep going; in most cases, you will only get more lost. If necessary, when you back up and start over, take smaller steps, find and use simpler, more concrete, and more specific learning materials and examples, and practice more at each step. Repeat this process, backing up, simplifying and practicing more until you find yourself making progress. Don’t try to “drink from a firehose.” Be patient. Take your time and learn and master each step in sequence. In a nutshell, this is the secret of mastering mathematics for most people.

© 2014 John F. McGowan

About the Author

John F. McGowan, Ph.D. solves problems using mathematics and mathematical software, including developing video compression and speech recognition technologies. He has extensive experience developing software in C, C++, Visual Basic, Mathematica, MATLAB, and many other programming languages. He is probably best known for his AVI Overview, an Internet FAQ (Frequently Asked Questions) on the Microsoft AVI (Audio Video Interleave) file format. He has worked as a contractor at NASA Ames Research Center involved in the research and development of image and video processing algorithms and technology and a Visiting Scholar at HP Labs working on computer vision applications for mobile devices. He has published articles on the origin and evolution of life, the exploration of Mars (anticipating the discovery of methane on Mars), and cheap access to space. He has a Ph.D. in physics from the University of Illinois at Urbana-Champaign and a B.S. in physics from the California Institute of Technology (Caltech).

A great example of data mining in practice is the use of frequent itemset mining to target customers with coupons and other discounts. Ever wonder why you get a yogurt coupon at the register when you check out? That’s because across thousands of other customers, a subgroup of people with shopping habits similar to yours consistently buys yogurt, and perhaps with a little prompting the grocery vendor can get you to consistently buy yogurt too.

As random access memory (RAM) prices dropped (and virtual memory procedures within operating systems improved), it became much more feasible to process even extremely large datasets within active memory, reducing the need for algorithmic refinements necessary for disk-based processing. But by then, "data mining" (marketed as a way to increase business profits) had become such a popular buzz-word, it was used to refer to any type of data analysis.

A great example of a “Big Data” programming model is the MapReduce framework developed by Google. The basic idea is that any data manipulation step has a Map function that can be distributed over many, many nodes on a computing cluster that then filter and sort their own portion of the data. A Reduce function is then performed that combines the selected and sorted data entries into a summary value. This model is implemented by the popular Big Data system Apache Hadoop.

Thanks to Alex Fish for her thoughtful edits.

R-bloggers.com offers daily e-mail updates about R news and tutorials on topics such as: visualization (ggplot2, Boxplots, maps, animation), programming (RStudio, Sweave, LaTeX, SQL, Eclipse, git, hadoop, Web Scraping) statistics (regression, PCA, time series, trading) and more...